Copilot dilemma

I'm circumventing my policy of never talking about AI by instead talking about GitHub Copilot, which is simply a VS Code extension. Okay, not really: I'm talking about AI, and I hate that I'm here adding to the noise.

I had a conversation with Fuchs recently about this. In short, my dilemma is this: while I object to current AI tooling on many levels, it strikes me as a technology and a trend that is here to stay, and by taking a principled stand I harm my current employer and my current cause without making any real impact on the trend itself. In other words, by opting out I'm only hurting myself and my employer.

Briefly, some of my reservations are:

- Large language models are built on the work of other people who did not opt into having that work inform these tools and benefit OpenAI and co. By using AI-based tooling I'm taking advantage of their efforts without giving them any credit

- By making queries and submitting my code I'm doing the same thing with my organization's information and resources: I'm submitting that information to be ingested and resold to other people

- I'm not convinced that the people profiting off of these tools are building and selling them while taking into account the dangers they pose. No, that's not true: I'm certain they're not taking those things into consideration

- I work on climate change and I've read articles that lead me to believe that these tools are horrendous from an energy-to-output perspective

- I have a very strong default negative response to breathless hype-cycles

The first bullet deserves special consideration here. I really appreciate the whole open-source ecosystem. I've written at some length on this site about how it enables small nonprofits like mine to have an outsized impact. People work away for free on these staples of the tech world with the idea that their labor is freely assisting groups like mine, who then build on them and have an impact on society. Or, to look at it from another vantage point, sites like Stack Overflow build free, open forums to help developers like me with our work and, because that content is freely available, it gets vacuumed up by companies like OpenAI to train language models, which then outcompete Stack Overflow and put a bunch of people out of work. This feels like an insidious bastardization of open source, one that depends on policy lagging behind technology. To me these AI development tools would be utter shit without Stack Overflow. The people who submitted answers on Stack Overflow assumed their answers would be freely available. They never consented to their answers being the thing that powered a $20/month LLM that made a handful of tech bros rich.

All of this is separate from the arguably more devastating effect that products like Midjourney have had on the graphics and art world, or that ChatGPT has had on the writing world. These models would be nothing without the art that people have put out there for the public to enjoy for free, and yet they are putting artists and animators out of work in huge numbers. I don't know whether my feelings are out of touch, but I'm very uncomfortable with what's happening with AI. My naive best guess is, again, that this is simply what happens when advancements outpace policy.

Der Läufer had a devastatingly simple and compelling response to my dilemma. To paraphrase: personal and professional expediency has been used to justify all sorts of bad behavior; if it's morally wrong, then you shouldn't do it. That's simply true. Absolutely true.

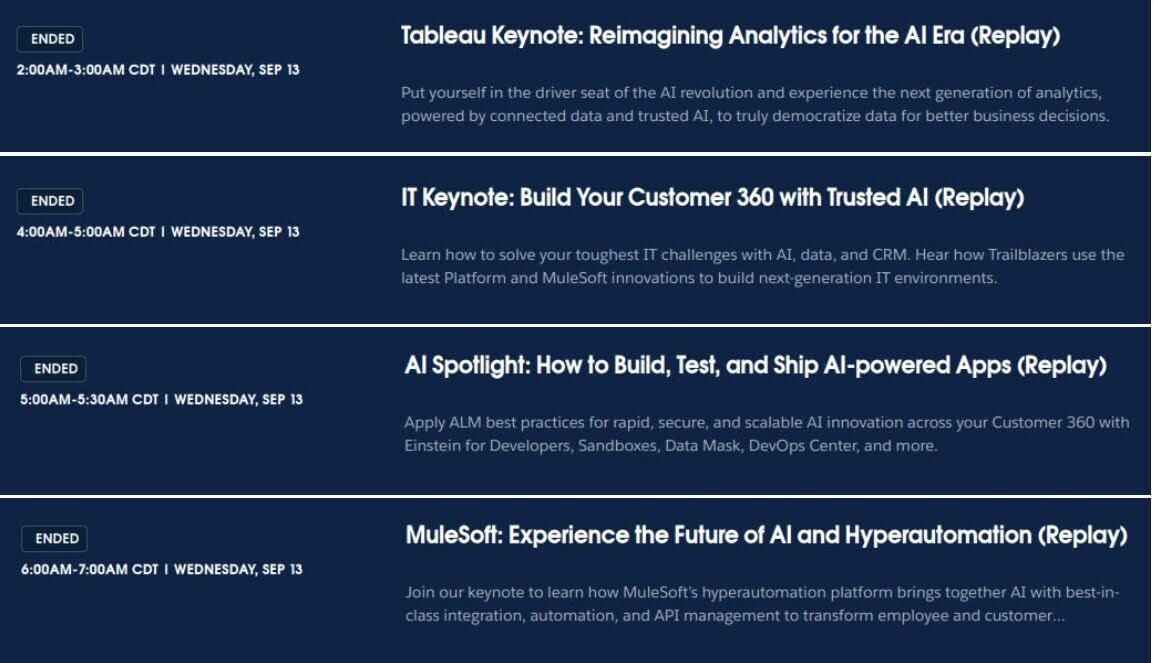

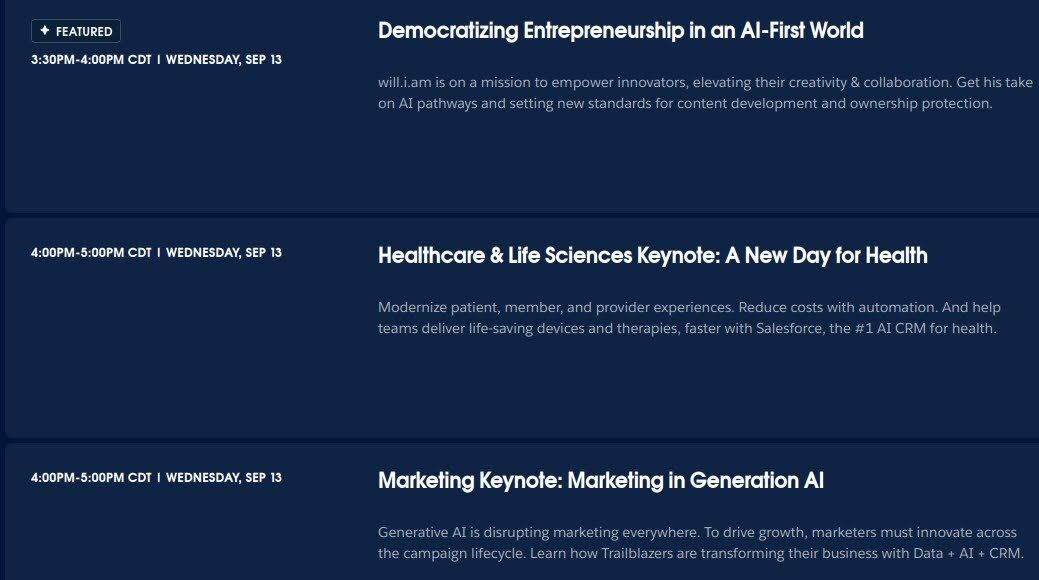

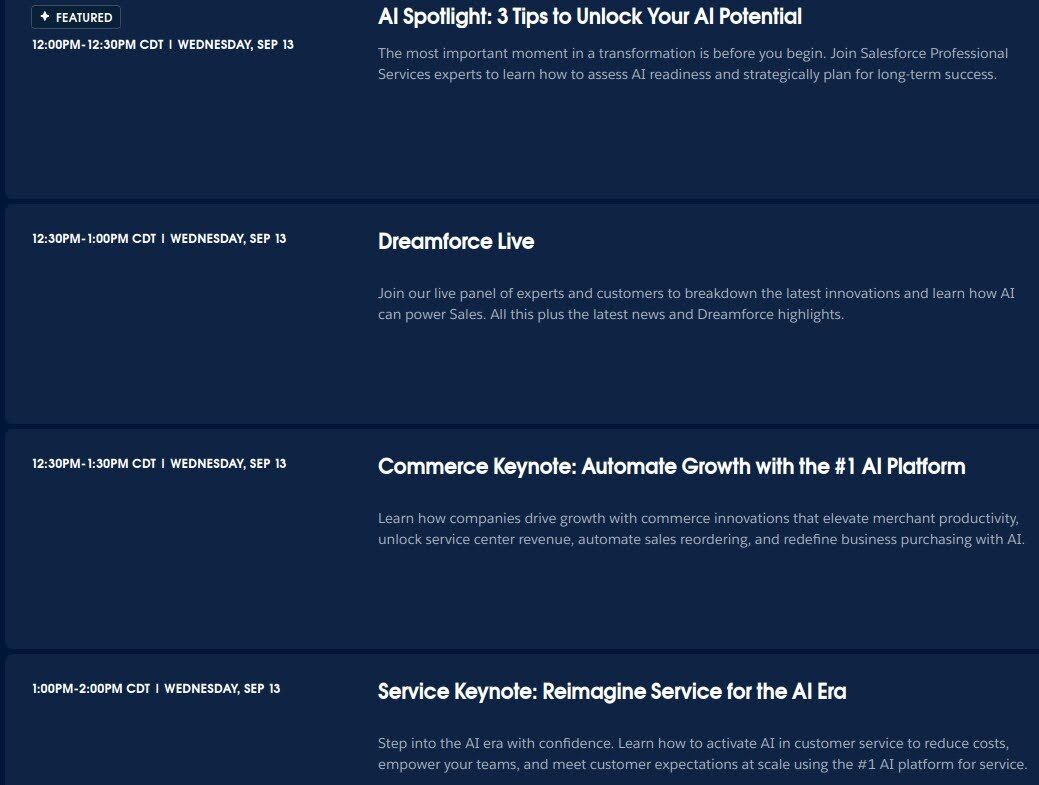

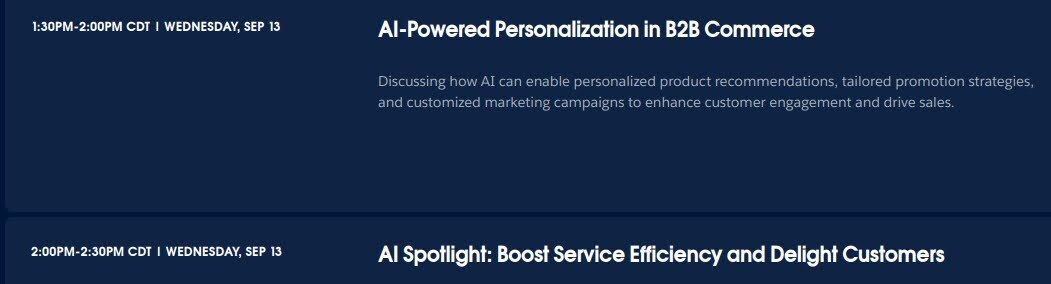

Since then, however, it seems that the entire tech world has moved right on past me. Just about half of my job is to maintain a CRM system. The most recent annual conference for said CRM system looked like this just a couple of months ago:

Subtle much? I'm sensing a theme...

Recent issues of Harvard Business Review, Linux magazines, professional development podcasts, and software training platforms have all highlighted AI as a must-learn skill. On top of that, non-AI podcasts I listen to have been highlighting it too. People I trust who have been AI skeptics are basically saying, "Yep, you need to know this to do your job." For someone focused on productivity who thinks a lot about keeping up to date, this is agonizing.

The funny thing is that, after an afternoon at Barnes and Noble where I read no fewer than three magazines that were each about 90% focused on AI, I decided I should probably jump in. But as soon as I'd biked home, I saw multiple podcasts highlighting that Sam Altman had just been ousted as OpenAI's CEO. I still don't know exactly why, but several people have suggested that it was due to safety concerns. In other words, the board ousted him because they thought he was moving too fast to monetize this stuff without thinking about safety. That does not make me feel any better.

Anyway, I'm still in limbo. I'm not sure whether I'm the old programmer saying the equivalent of "machine code has been good enough for me, so it should be good enough for you" or whether I should jump in and embrace change in spite of my reservations. This is just a tough, weird moral and professional space. It doesn't help that so much seems up in the air right now. Like, why did AI have to come in and add to the drama these days?

I've been struggling to think of analogies, but one does come to mind. In the climate space I have a friend and former co-worker, someone I very much admire, who happens to be a Mennonite. For a good while he completely opted out of driving and taking public transportation because of the harm that fossil fuels do to the environment. Eventually he realized that opting out gave him a false sense of effectiveness or agency. To make a real difference he needed to travel: to get to the people who needed to hear what he had to say. Is that the right way to think about AI? Should I opt in to tools like Copilot to become a more efficient developer so that I can have a bigger impact? Or should I opt out because the mental and moral contortions I'd use to justify jumping in are exactly the problem?