Best Practices?

The software industry is a noisy and frantic place, and I've noticed that I'm not immune to the chaos. In a previous post, I wrote about the damage that I think is being done by an outdated notion of software as a field that's inaccessible to many people. In some sense, I think a similar phenomenon exists within the industry itself, aimed at professional developers. It shows up at least in what the software industry would have you believe is required to be following "best practices." For as long as I've been in this field, I've struggled to parse out what's legitimately good advice, what's hype, and what's pure gatekeeping.

At this point in my career I've seen enough trends come and go that I feel justified in my cynicism. I remember when you weren't a "real programmer" unless you diagrammed your entire system in UML in advance. Similarly, you were a fraud if you weren't applying GoF patterns and SOLID principles to just about every solution. And you were falling behind as a developer unless you were learning Flash or Java Applets or Silverlight.

I've certainly dived head first into several of these trends. And of course, some really do take off: it's hard to argue with all of the job postings out there for data scientists and people with AWS knowledge. But there's so much noise, and an ambient, uneasy feeling of always being behind. "JavaScript Fatigue" is a fun and descriptive phrase that's come out of this. It captures the overwhelm of having a dozen frameworks and tools you supposedly need to know in order to do your job well and call yourself a web developer. If you're like me and make a passing attempt at having goals in life beyond work, you're lucky if you can stay up to date in just your core competencies. It takes a good long while to pivot and pick up a new framework or technology. After a couple of times of doing that and getting burned as it turns obsolete, you become very wary.

A few recent examples that come to mind are Angular, Webpack, and WebGL. All of these were touted as "must know" technologies for any serious developer. To be sure, all of them are legitimate technologies that are still going strong, with people making careers out of working with them. But in each case I tried to "get ahead," only to see alternatives come out and render my knowledge obsolete. Parcel and integrated build tools replaced Webpack, React took the place of Angular, and higher-level libraries like Three.js are what I've used instead of raw WebGL.

All of this is apart from what I would consider true hype: the IoTs and Edge Computings and Blockchains of the industry. Again, I don't think these are nonsense per se, but they do tend to get billed as the "must know" technology that's about to make you unemployed.

Recently I heard this put in a way that makes a lot of sense to me. It was in the context of a conversation about service-oriented architecture (SOA), or micro-services. For reference, SOA is an architecture that involves breaking your application up into distinct, stand-alone systems. These separate systems can then talk to each other through well-defined APIs, scale independently, and have their own teams to maintain them.

A consultant being interviewed mentioned that he spent a good amount of time undoing SOA deployments for teams whose implementations had become too complicated and unwieldy. His take was that micro-services are a great solution for companies like Netflix or Google, which operate at massive scale and have hundreds of developers working on their systems. Micro-services allow those companies to scale the separate parts of their systems independently and to have dedicated teams work on each part. But applying that model to a small organization with a modest number of developers introduces needless complexity and frustration. The benefits, such as easy scaling, might not even be relevant to the system. In an interview on Talk Python, Ravin Kumar (author, former developer at SpaceX, and senior developer at SweetGreen) voices a similar point of view:

The right way is what works for you to do the thing you need to do. And the thing Google is doing most likely is the wrong way for you. [...] if you're running Hadoop on your Excel file [that's definitely] the wrong way.

I think this phenomenon is happening more broadly. The kinds of technologies and industry practices being highlighted online, at conferences, and on learning platforms are the kind being used by big FAANG companies. This isn't necessarily malicious. These are the cutting-edge technologies that people at such companies are building to solve their unique problems, and developers at these companies are the ones being invited to conferences to talk about how they're pushing the industry forward. The end result is that discussions are skewed towards the exotic (streaming databases, micro-services, protocol buffers), when almost no one really needs those solutions.

A perhaps unpopular question I have is whether the same concept applies to many popular programming practices. I'm thinking of practices like the stringent application of agile/scrum, code reviews, and test-driven development (TDD). I've seen all of these, to various degrees, picked up and then dropped again at places I've worked. It might be that I myself wasn't invested enough, and it might be that not applying them universally is a flaw in my approach to the craft. I've heard strong and convincing arguments for all of these practices, and I'm certainly not putting them in the category of hype. I feel a fair bit of guilt about not applying them (especially TDD) at all times in my day-to-day work.

But I've been mulling over whether practices like these are, as with micro-services and streaming databases, issues of scale. I've almost always operated as a team of one, or at most three. Even when I am working with other people, it's generally on entirely separate parts of a given app. Similarly, I've almost never found myself with the room to fully implement practices like 100% code review or 100% test coverage.

I love books like Clean Code, Code Complete, and Design Patterns, and I'm a fan of the Software Craftsmanship concept. These are books and schools of thought that encourage a deliberate and professional approach to development. There's a lot of hastily made, frail code out there. Sometimes such code does a lot of damage, resulting in bad user experiences and disdain for software in general. But when I reflect on the day-to-day work that I typically do, I feel less like an architect building a cathedral and more like a construction worker trying to hastily erect a prefab house before a storm hits, or a plumber trying to fix a leak in an existing home.

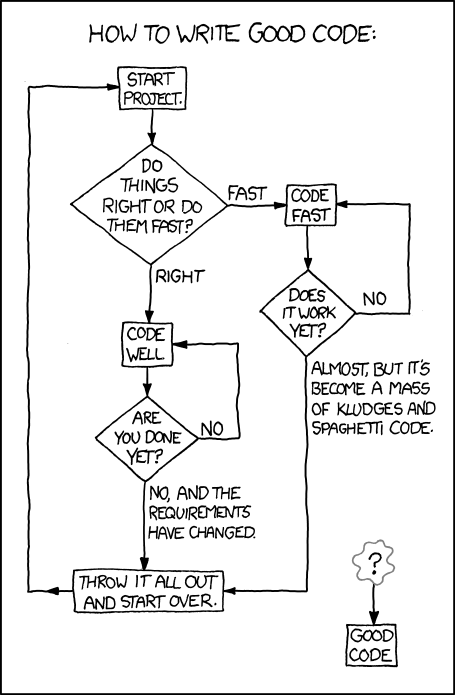

How to Write Good Code, from XKCD

"But don't you take pride in your work? Don't you want your code to be reliable and maintainable?" Absolutely. I've had the rare good fortune of working at organizations whose missions I care a great deal about. I want those organizations to succeed, and I work accordingly. On top of that, I'm in a field that I love, and I would say that my preoccupation with software isn't always healthy or well-balanced. But I'm constantly confronted with the trade-off between achieving organizational goals and accruing technical debt. Certainly I haven't always struck the right balance, and maybe it's not even fair for me to judge practices that I haven't seen implemented well. But it seems to me that there's a strong bias out there towards tools and practices created by and for enterprises. Those enterprises operate at scales and under constraints that don't make sense for the majority of companies. I think that mismatch does a lot of damage. When you're already dealing with small budgets, short time frames, and limited staffing, it's incredibly painful to implement solutions that you later find out don't really fit.

I know I've spent a fair bit of time agonizing over the idea that I'm not "doing it right." I've written a lot of hasty code that the software craftsmen and craftswomen of the world would wince at. But when you're working for a non-profit or an agency with a hard deadline and a fixed budget, the bullet-proof cathedral approach isn't always viable.

That's my real beef. I don't think that realistic constraints, whether of time, money, or company size, tend to be accurately reflected in the training and literature out there. To return to our building analogy, it's as if all of the available guides were about how to build and maintain industrial HVAC systems, and none were about how to replace a leaky faucet.

Scott Hanselman has spoken very eloquently about how the average developer is often overlooked. He coined the term "Dark Matter Developers" in a blog post that every developer should read. He says:

Point is, we need to find a balance between those of us online yelling and tweeting and pushing towards the Next Big Thing and those that are unseen and patient and focused on the business problem at hand.

He points out that there are many developers out there happily working with old technology and punching out at 5:01pm who "will never read this blog post because they are getting work done using tech from ten years ago and that's totally OK." I completely agree with him. I would only add that there's also a large segment of developers who do pay attention and would love to improve their practice and apply better solutions to their problems. When they look around, though, they see only those "Next Big Things" that were never meant for their situation.